Personal Science Week - 250814 Vibe Coding

More exciting tips and lessons from LLM chatbots

Personal scientists wonder about everything, but until recently it was too difficult or time-consuming to get custom answers to our specific questions. LLMs, especially the newly updated, more powerful versions, are dramatically increasing the number of answers we can get.

This week we’ll look at some specific examples, along with lessons and suggestions for doing your own “vibe coding” and more.

Back in PSWeek250703 I mentioned one of my first forays into what is often referred to as “vibe coding”: using an LLM to generate and run programming code from scratch based on plain English dialog with the chatbot. At the time, I resurrected an old software engineering project, Seth Roberts’s Brain Reaction Time, and was blown away by the results. (You can run my vibe-coded version at brt.personalscience.com.)

Since then I’ve been doing much more vibe coding, to the point where I get seriously rabbit-holed every time I read a news article/blog post/book and wonder how a fact relates to something the authors didn’t quite explain.

Example 1

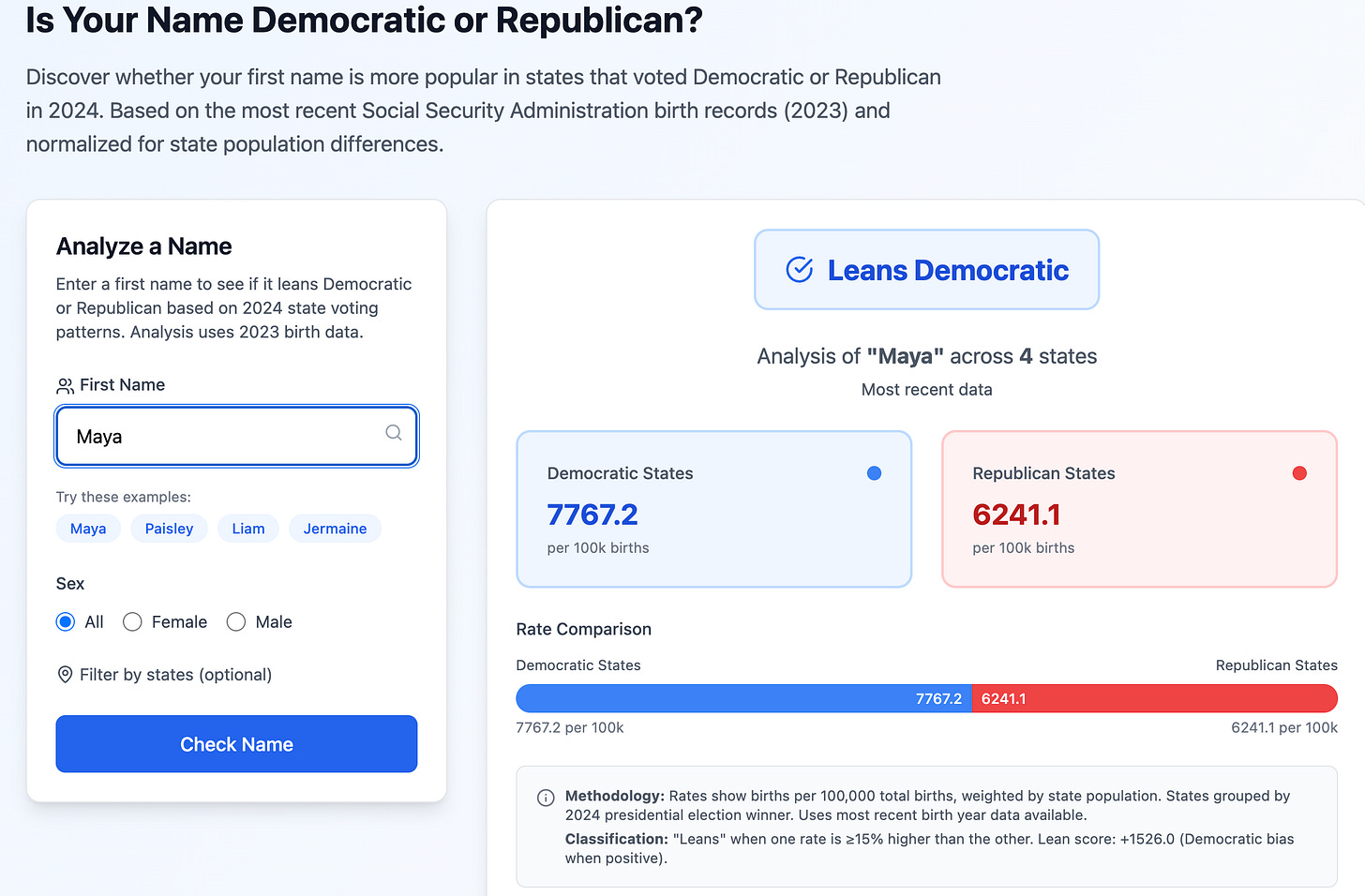

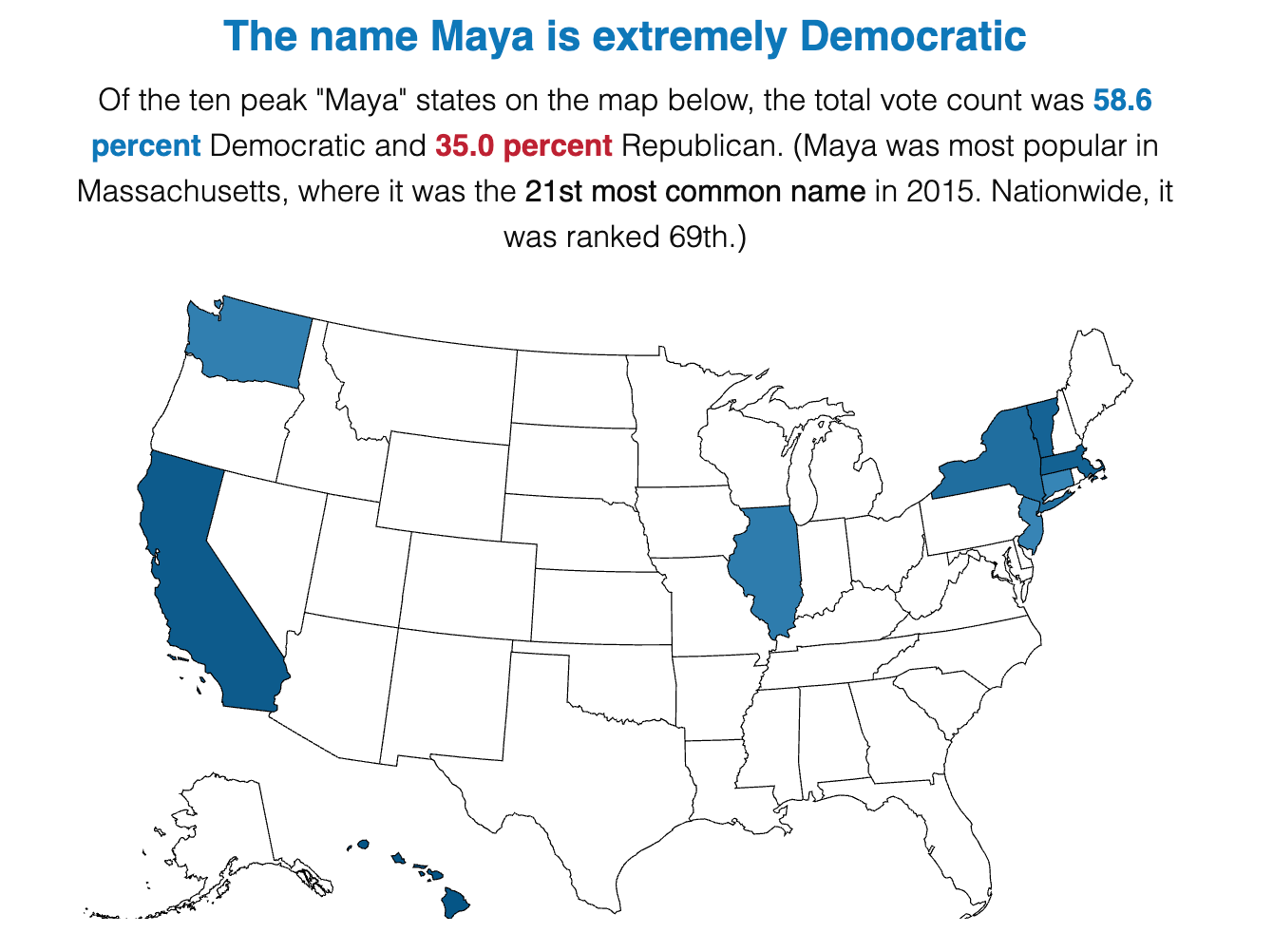

I recently bumped into an old Time article about baby names’ relationship to political affiliation. It included a text box that let you enter any name to get something like this:

But the Time article was almost ten years old. Do the results still mean anything?

In a few minutes of vibe-coding I was able to generate this updated version using 2024 data, which shows that apparently this name is becoming less partisan:

It’s especially empowering to post the app online, where it’s available to anyone on the internet. Vibe-coding apps like Bolt make that easy.

But for your own experimentation you don’t need to make an interactive version. When data visualization is this easy, you can just do it for your own purposes as a way to understand some data for yourself.

Example 2

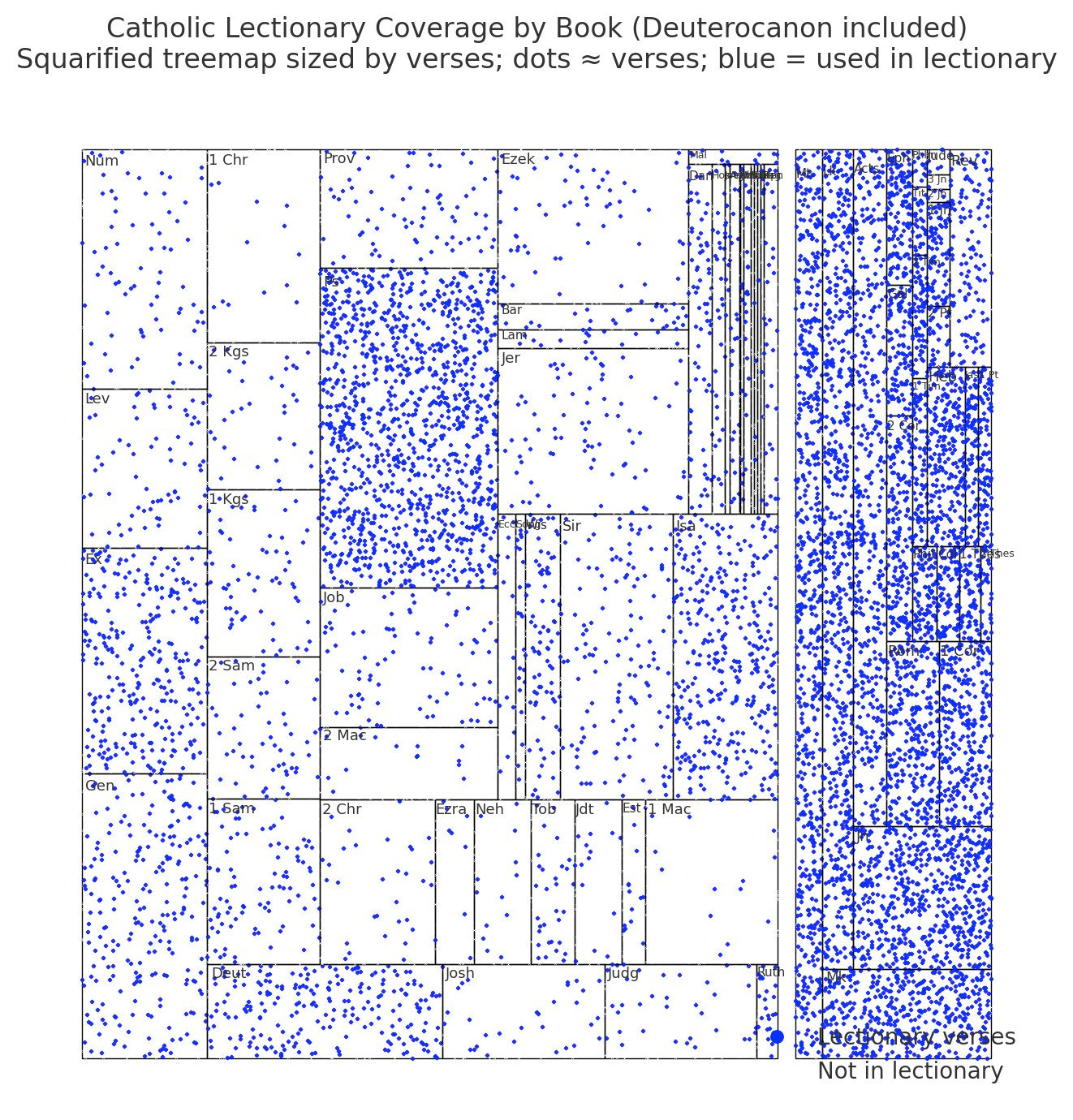

The Catholic Church publishes a lectionary of specific Bible verses to be read at each service (Mass) throughout the year. Although it skips parts that are long, repetitive, or otherwise inappropriate (e.g. genealogies), the intent is that somebody who attends every Mass will have read a significant portion of the Bible after three years.

But is that a lot, or a little? And a percentage alone means little if the readings keep returning to the same portions of the text.

So I asked ChatGPT-5 to generate a plot showing, word by word and in order, how much of the Bible is represented. Here’s the answer (after a few seconds of typing the prompts):

You can do this easily with any book, in any language. Want to compare versions of Shakespeare’s plays? Or do a frequency analysis of anachronistic word choices in the TV series Downton Abbey? It’s time to stretch your imagination to places you never considered.
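For readers curious what sits behind a plot like this, here is a minimal sketch of the underlying calculation, not the code ChatGPT actually produced. It assumes you already have the total word count of the book and a list of word-offset ranges that get read; the names `merge_spans`, `coverage_fraction`, and `coverage_profile` are made up for illustration, and the numbers in the demo are placeholders rather than real lectionary data.

```python
from typing import List, Sequence, Tuple

# A span is a half-open range [start, end) of word offsets into the book.
Span = Tuple[int, int]


def merge_spans(spans: Sequence[Span]) -> List[Span]:
    """Combine overlapping or touching spans so no word is counted twice."""
    merged: List[Span] = []
    for start, end in sorted(spans):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def coverage_fraction(spans: Sequence[Span], total_words: int) -> float:
    """Share of the whole text that appears in at least one reading."""
    covered = sum(end - start for start, end in merge_spans(spans))
    return covered / total_words


def coverage_profile(spans: Sequence[Span], total_words: int, bins: int) -> List[float]:
    """Split the text into equal-width bins, in order, and report how much of each is covered."""
    merged = merge_spans(spans)
    edges = [i * total_words // bins for i in range(bins + 1)]
    profile = []
    for lo, hi in zip(edges, edges[1:]):
        covered = sum(max(0, min(end, hi) - max(start, lo)) for start, end in merged)
        profile.append(covered / (hi - lo) if hi > lo else 0.0)
    return profile


if __name__ == "__main__":
    # Placeholder input: a 100-word "book" with three readings, two of which overlap.
    readings = [(0, 10), (5, 20), (50, 60)]
    print(coverage_fraction(readings, 100))
    print(coverage_profile(readings, 100, bins=5))
```

The profile is the part that answers the "same portion of the text" worry: a single percentage hides whether the readings are spread evenly or bunched up, while the per-bin values show where in the book the coverage actually falls. Feeding the profile into any charting tool gives a picture along the lines of the one above.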

Why Wonder?

Pay attention to your conversations with interesting people and I bet you’ll be surprised how many sentences begin with “I wonder what/why/how…”. With LLMs you no longer need to wonder: you can get the answer immediately, usually so quickly that it needn’t interrupt the flow of the conversation.

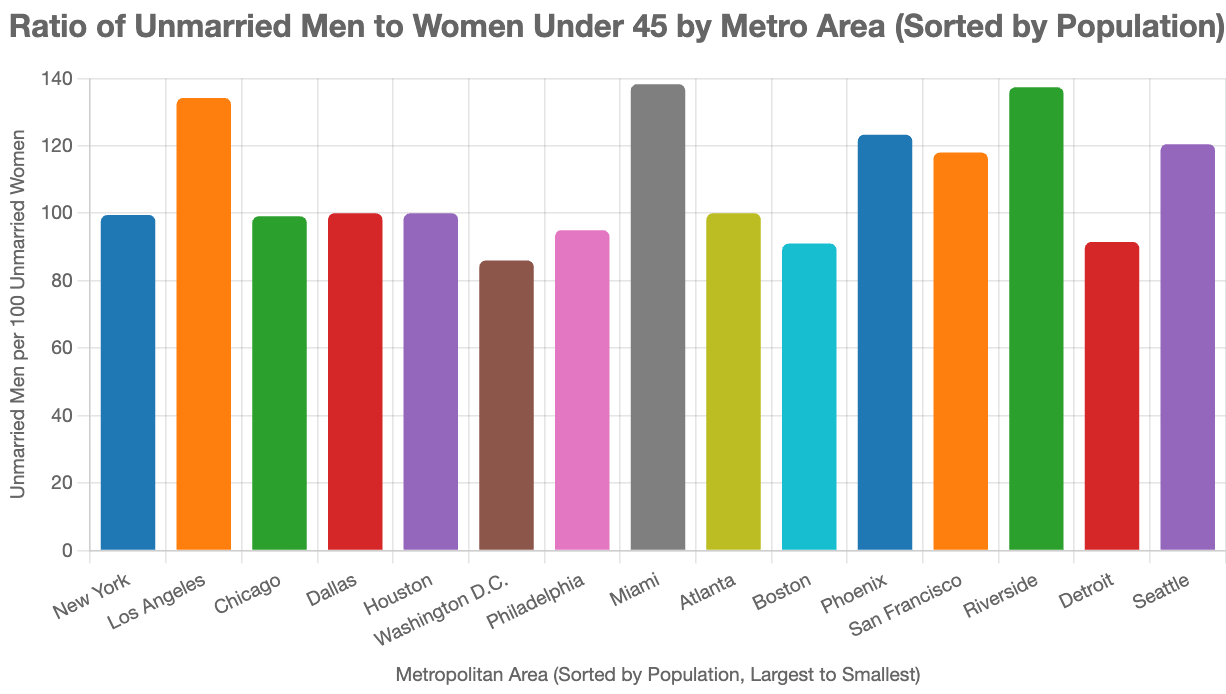

While reading a recent newspaper article about the high ratio of unmarried men in Seattle, my wife wondered how this compares to other metropolitan areas. In literally less than 15 seconds I made this:

All of the top LLMs let you speak your question with little or no lag time, so rather than speculate about something, I’m finding it more convenient to simply pick up my phone and settle the issue right in the middle of the conversation.

Lessons and Tips

Here are some of my top lessons from using LLMs regularly for vibe coding and more.

Don’t write the prompt yourself. Instead use “meta-prompting”: explain your problem to one chatbot and get it to write a more thorough prompt. You can edit the full prompt again before throwing it into the coding LLM, but

Use Claude Artifacts (or their equivalent on your favorite LLM). Often you don’t need to vibe code at all. The “frontier” LLMs (ChatGPT, Claude, Grok, etc.) are getting better all the time, enough that you usually needn’t bother running a separate full-blown programming environment.

Don’t be cheap. My $20/month Claude subscription is the best money I’ve ever spent. Yes, there are free versions, but for any serious use you don’t want the limitations of free. Get the annual plan for $17/mo.

Grok is underrated. I find it about as good as the (paid) version of ChatGPT, only much cheaper ($8/month instead of $20/mo). I paid under $200 for a yearly subscription to X Premium+ (no ads, full access to SuperGrok).

Don’t be afraid to use the Chinese versions, which are nearly as good as, and sometimes better than, the paid versions from US companies. Unencumbered by US copyright laws, they sometimes have access to data sets that you won’t get from other LLMs. You probably already know about DeepSeek, but you might also try Qwen, which is very good.

I haven’t found as much utility in browser-based vibe coding apps like Bolt (which I used to build the BRT app mentioned above), Replit (which I tried long ago), or Lovable (popular, but I haven’t tried it for anything serious). These are probably good options for somebody with no programming experience, but I’ll stick to Claude Code.

Don’t trust the results! As with everything—especially LLMs—we personal scientists need to be extra skeptical. Don’t believe what you see just because something comes with fancy charts or numbers. If you’re not sure the answer is correct, copy/paste the answer into another LLM to double-check.
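To make the meta-prompting tip concrete, here is a small sketch of a helper that wraps your plain-English problem in a request for a more thorough prompt. The wording of the template, the function name `build_meta_prompt`, and its parameters are all my own assumptions for illustration; it calls no LLM and simply produces text you would paste into the first chatbot.

```python
from typing import Sequence


def build_meta_prompt(
    problem: str,
    data_sources: Sequence[str] = (),
    constraints: Sequence[str] = (),
) -> str:
    """Return text asking one chatbot to write a detailed prompt for a coding LLM.

    Hypothetical template: adjust the wording to taste.
    """
    lines = [
        "I want a different AI coding assistant to build something for me.",
        "Please write a thorough, self-contained prompt that I can paste into it.",
        "",
        "My problem, in plain English:",
        problem.strip(),
    ]
    if data_sources:
        lines += ["", "Data I expect to use:"]
        lines += [f"- {source}" for source in data_sources]
    if constraints:
        lines += ["", "Constraints:"]
        lines += [f"- {item}" for item in constraints]
    lines += [
        "",
        "In the prompt you write, spell out the inputs, the expected output,",
        "the edge cases, and a way for me to check that the result is correct.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        build_meta_prompt(
            "Compare the ratio of unmarried men to unmarried women across large US metro areas.",
            data_sources=["A public census table (to be chosen)"],
            constraints=["Produce a single ranked bar chart"],
        )
    )
```

Asking for a built-in way to check the result ties in with the last tip: the more explicit the prompt is about verification, the easier it is to stay skeptical of whatever comes back.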

Personal Science Weekly Readings

Personal Scientist of the Week: Elizabeth Van Nostrand has been trying supplements and letting people guess the results on the prediction market Manifold. When prediction markets become more mainstream and millions of people (or AI bots?) use them to make bets, it’ll be possible for a normal person to put down a minimal amount of money and get the Great Hive Mind to guess the results of their experiment before they complete it.

Bob Troia (Quantified Bob) has been tracking his glucose; see his X reply to Bryan Johnson, who noticed that travel has a significant effect on glucose levels.

And speaking of Bryan Johnson, I liked this review of his recent Don’t Die Summit by Nic Rowan in The Lamp: Less Than Angels. There is a real religious aspect to Johnson’s part of the longevity movement, but Rowan notes that:

every project to purge imperfection from human nature inevitably founders on it, when human fallibility, so glorious and disastrous, drowns our best attempts to lift ourselves out of the sea and into the company of the angels.

The new edition of the Handbook of Social Psychology is now available online and for free. The handbook synthesizes decades of empirical research on how people actually behave, think, and interact—not just how we assume they do. For example, Chapter 14 on Well-Being is an extensive survey of factors that genuinely improve quality of life, which could inform your personal optimization experiments.

Volunteers needed for a new Weight Loss Study from PeopleScience. You agree to wear a CGM and monitor your weight for five weeks while taking the supplement Pivit, “a proprietary and patented formulation of food molecules, designed to act on receptors in the gut to cause a natural secretion of GLP-1 and GIP hormones”. You need a high BMI to qualify and be willing to send them your data.

About Personal Science

Personal scientists are driven by wonder. We notice something intriguing in the world and ask: "What if I could actually test that?" Now, with AI research assistants available 24/7, that curiosity can lead to immediate investigation rather than wishful thinking.

This democratization of research capability means we can finally satisfy those spontaneous "I wonder..." moments that arise in everyday conversation. Questions that would have required days in a library or expensive consultant time can now be explored in minutes. The bottleneck is no longer access to information—it's the quality of our questions.

The key is to maintain scientific rigor even as the pace of discovery accelerates. We still need careful experimental design, proper controls, and healthy skepticism about our results. But now we can pursue these investigations with the enthusiasm of having a tireless research partner who never judges our questions as too weird or too obvious.

We publish Personal Science each Thursday for anyone who believes that curiosity, properly channeled, is humanity's greatest renewable resource. Let us know what questions are sparking your wonder this week.

An experiment on how much geography each model really knows (https://www.lesswrong.com/posts/xwdRzJxyqFqgXTWbH/how-does-a-blind-model-see-the-earth): Grok wins if asked directly.