Personal Science Week - 250710 AI-Enhanced Science

More professional tools for personal scientists

Powerful new AI tools are attempting to extend and perhaps replace the science abilities of professionals.

This week we'll continue our look at those tools and their impact on scientific collaboration, plus more thoughts on how personal scientists can take advantage of some of those tools in our own work.

AI Scientists: Promise vs. Reality

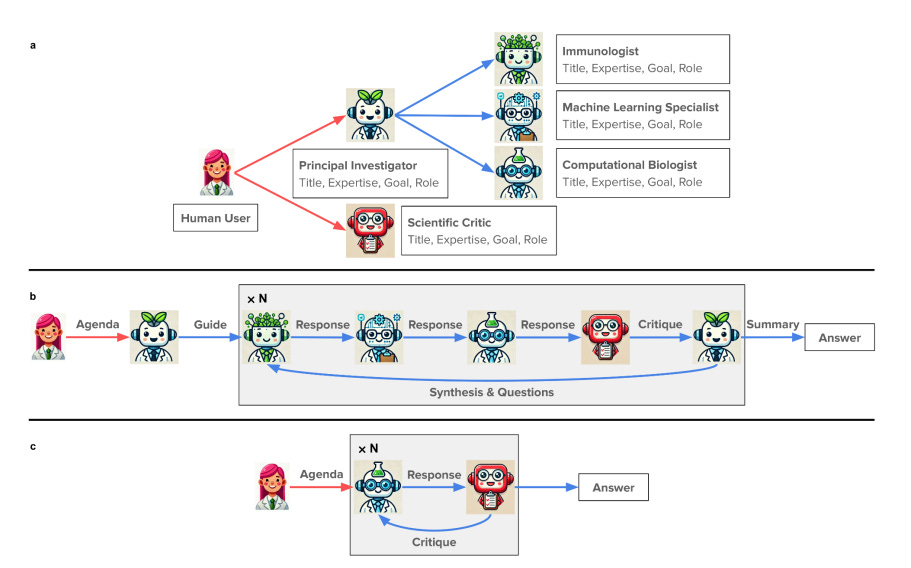

Back in PSWeek250612, we mentioned FutureHouse, an AI platform that hopes to revolutionize scientific research. Now a recent review in Nature adds Virtual Lab, a similar Stanford project that sets up a series of specialized chatbots, each providing a different perspective on a problem (chemist, computer scientist, etc.). The concept sounds impressive: a team of AI experts collaborating on complex scientific questions.

But as one researcher noted: “Maybe it's good to bounce ideas off. But will it be a game changer in my day-to-day? I doubt it.” While these tools excel at synthesizing existing literature, they rarely generate truly novel insights. The real value emerges when you use AI to shore up your weaknesses rather than amplify your strengths.

Rather than waiting for academic institutions to perfect their AI workflows, I've been experimenting with professional-grade coding tools to enhance my personal science projects.

A Personal Example: Building with AI

In last week’s PSWeek250703 I used Bolt to create a simple web app based on Seth Roberts' self-experimentation principles. In about 30 minutes, I had a functional app that would have taken me days or weeks to code manually. Tools like Bolt, or its competitors Lovable, Replit, and a growing number of others, bring professional-level programming abilities to everyone.

Meanwhile, software engineering professionals are themselves upping their game with powerful tools like Cursor, Windsurf, and more. You can find YouTube videos showing professionals bang out tens of thousands of lines of high-quality code in minutes.

A typical software project is organized around a “repo”, a repository of source code files written in one or more programming languages. A typical repo will have dozens or even thousands of these files, which an engineer edits line by line, usually in a specialized text editor called an IDE (Integrated Development Environment). Organizing all those files, and keeping track of which does what, is the skill that separates a beginning developer from a seasoned professional, and it is an obvious way for AI tools to multiply an engineer's productivity.

But these tools can be applied to more than just coding. For the past few weeks I've been experimenting with Claude Code, one of the professional-grade AI-powered software tools that works with a repo. But rather than use files made of computer programming source code, my repo is a collection of my self-experiment notes. I can have Word or Excel files, text files, PDFs, JPEG images—anything that Claude can handle.
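To make the idea of a "notes repo" concrete, here is a minimal Python sketch that takes inventory of a folder of self-experiment files by type. It is only an illustration, not part of Claude Code or any other tool: the function name `inventory_notes` and the folder name in the usage note are hypothetical.

```python
from collections import Counter
from pathlib import Path


def inventory_notes(repo_root):
    """Count files under repo_root by lowercase extension.

    Anything inside a hidden file or folder (a name starting with ".",
    such as .git) is skipped. The function name and behavior are
    illustrative assumptions, not any tool's API.
    """
    root = Path(repo_root)
    counts = Counter()
    for path in root.rglob("*"):
        rel_parts = path.relative_to(root).parts
        if any(part.startswith(".") for part in rel_parts):
            continue  # skip hidden files and everything under hidden folders
        if path.is_file():
            counts[path.suffix.lower() or "(no extension)"] += 1
    return counts
```

Calling `inventory_notes("my-experiments")` on a (hypothetical) folder returns a `Counter` keyed by extension, which is a quick way to see what mix of spreadsheets, PDFs, and text notes you would be handing to an AI tool.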

Quantified Self co-founder Gary Wolf started a thread at the QS Forum with tips and tricks for AI-Assisted Workflows for Personal Science with multiple examples. See especially Gary’s technique for integrating Zotero with Obsidian, letting him easily extract metadata and notes from his list of academic papers. You can also see my example using the similar (free) Google Gemini Code for dietary analysis.

I've moved most of my personal science work to this workflow, so expect to hear more about this in future posts.

Practical Guidelines for Personal Scientists

Meanwhile, the power and quality of these tools are changing rapidly. How can you keep up? Nature's February 2025 "Guide to AI Tools" is already obsolete, a sobering illustration of how rapidly this landscape changes. Tools that seemed cutting-edge six months ago are now standard features in ChatGPT or Claude. It's a fundamental challenge: by the time you learn to use a specialized AI research tool, something better has probably emerged.

Personal scientists face the additional burden of evaluating not just whether these tools work, but whether they're worth the learning curve investment when simpler alternatives might suffice.

Here are some principles to consider:

1. Use AI to explore unfamiliar domains. If you're strong in biochemistry but weak in statistics, let AI handle the statistical interpretation while you focus on the biological mechanisms. Start with broad questions like "What statistical tests are commonly used for microbiome data?" rather than asking for specific analysis.

2. Always verify specific claims independently. AI tools excel at broad synthesis but frequently hallucinate specific details like dosages, study sizes, or statistical significance levels. Cross-check any numerical claims with original sources.

3. Prefer multiple AI opinions over single sources. Just as you wouldn't trust a single study, don't trust a single AI analysis. Compare results across different platforms and models—I routinely check the same question with Claude, ChatGPT, and Perplexity.

4. Focus on methodology over conclusions. AI tools are better at explaining how studies were conducted than interpreting what they mean for your personal situation. Ask "How was this study designed?" rather than "Should I take this supplement?"
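Principles 2 and 3 can be partly mechanized. The sketch below assumes you have pasted each model's answer to the same question into a dictionary. It pulls out the numbers each answer mentions and reports any number that not every source cites, which gives you a short list of figures to check against the original paper. The function names and the sample answers are made up for illustration; no AI service is called.

```python
import re

# Matches integers and simple decimals such as 8, 120, or 0.05.
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def numbers_in(text):
    """Return the set of numeric strings mentioned in text."""
    return set(NUMBER.findall(text))


def disputed_numbers(answers):
    """Map each number to the sources that cite it, keeping only
    numbers that at least one source does not mention.

    answers: dict of {source_name: answer_text}
    """
    cited = {}
    for source, text in answers.items():
        for number in numbers_in(text):
            cited.setdefault(number, set()).add(source)
    return {n: s for n, s in cited.items() if len(s) < len(answers)}


if __name__ == "__main__":
    # Invented answers, standing in for text pasted from two chatbots.
    answers = {
        "model_a": "The trial enrolled 120 adults for 8 weeks.",
        "model_b": "The trial enrolled 210 adults for 8 weeks.",
    }
    for number, sources in sorted(disputed_numbers(answers).items()):
        print(number, "cited only by", sorted(sources))
```

A number flagged here is not necessarily wrong, and agreement between models does not make a number right. The output is just a prompt to go look at the source.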

Keep in mind that AI only answers the questions that you ask. The danger is that by making it so easy to ask questions, AI may keep you from noticing the underlying structure that you really should be paying attention to instead.

A short essay from evolutionary scientists Heather Heying and Bret Weinstein recommends Don’t Look It Up:

What harm can come of looking up answers to straightforward questions? The harm is that it trains us all to be less self-reliant, less able to make connections in our own brains, and less willing to search for relevant things that we do know, and then try to apply those things to systems we know less about.

Their advice: rather than facts, learn to use tools, including intellectual ones like the ability to carefully observe. And especially, don’t rely on online tools for “why” questions.

Personal Science Weekly Readings

Speaking of science and AI, DeepMind researcher Ed Hughes proposes “Popperian AI” that uses LLMs to propose falsifiable theories, write experiments to test them, and recursively improve itself. This isn't just academic speculation. Sakana's AI Scientist has already cranked out auto-generated workshop papers, and AlphaEvolve discovered a more efficient matrix multiplication algorithm. Also see Sakana's Darwin-Gödel Machine, a recursive system that modifies itself while evaluating its own performance. It's achieving state-of-the-art results on software engineering benchmarks without requiring massive computational resources, the kind of breakthrough that makes you wonder what else these systems might figure out that we've missed.

Speaking of critical thinking, researchers studying 8,500 people found that astrology believers tend to have lower intelligence and education levels, regardless of religious or spiritual beliefs. Interestingly, people with right-wing political beliefs were slightly more skeptical of astrology than others—a reminder that scientific skepticism doesn't align neatly with political categories.

David Chapman also asks the good question Do we know the Higgs Boson exists? In these huge bureaucratic science projects, no single human really understands everything involved, and even domain experts essentially have to “take their word for it”. This is a very different kind of scientific result than, say, the conclusion that the Earth is 93 million miles from the Sun, which the rest of us can understand and even prove for ourselves.

Finally, researchers found that exercise slows our perception of time—participants underestimated elapsed time by 2-10% during physical activity. This offers an interesting self-experiment opportunity: try timing your workouts subjectively versus objectively to see if you experience this effect.
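If you try this, the arithmetic is simple enough to script. The following is a minimal sketch, assuming you jot down your guess of the elapsed minutes before looking at the clock. The function names and the two sample sessions are invented for illustration.

```python
from statistics import mean


def percent_error(estimated_minutes, actual_minutes):
    """Signed error of a time estimate as a percentage of the true duration.

    Negative values mean the workout felt shorter than it really was.
    """
    if actual_minutes <= 0:
        raise ValueError("actual_minutes must be positive")
    return 100 * (estimated_minutes - actual_minutes) / actual_minutes


def mean_percent_error(sessions):
    """Average signed error over (estimated, actual) pairs."""
    return mean(percent_error(est, act) for est, act in sessions)


if __name__ == "__main__":
    # Invented sessions: (your guess in minutes, stopwatch minutes).
    sessions = [(27, 30), (38, 40)]
    print(mean_percent_error(sessions))
```

Keeping the sign matters: averaging absolute errors would tell you how far off you are, but not whether you consistently underestimate, which is the effect the study reports.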

About Personal Science

The question isn't whether AI will transform scientific research—it already has. The question is whether we'll maintain the critical thinking skills necessary to distinguish genuine insights from sophisticated-sounding nonsense. For personal scientists, this balance between leveraging AI capabilities and preserving human judgment may be the most important skill to develop.

As always, our motto remains: be open to new ideas, but skeptical. Whether the source is a peer-reviewed journal, an AI chatbot, or your own data, the same standards of evidence apply.

How are you using AI for your personal science work? Let us know.

This is really cutting edge, I’m excited to hear about more examples / insights that a Claude Code repo enables!

Are you using a Pro subscription, and do you find that it is able to handle all of your files?

- LLMs are tremendously useful for software development, but "banging out [...] lines of high-quality code" is typically not the bottleneck for most software developers.

- People may be "skeptical" of astrology not because they have a scientific mindset, but because it conflicts with their religious beliefs.