Personal Science Week - 250501 Misinformation

Quantify your ability to sniff out bad information

As personal scientists, we are naturally skeptical and data-driven in our approach to information. We prefer to verify claims ourselves rather than rely solely on authority or conventional wisdom.

This week we'll examine a tool designed to measure our ability to distinguish real news from fake, and discuss what it reveals about truth in media.

Yara Kyrychenko is a PhD candidate at NYU who studies “computational social psychology,” including how misinformation spreads. She’s also a co-author of the 2023 paper “The Misinformation Susceptibility Test (MIST): A psychometrically validated measure of news veracity discernment,” a widely publicized study that quizzed several thousand people on their ability to tell “real” headlines from “fake” ones.

The best part is that Yara includes an online quiz so you can compare your results to others surveyed in the paper: https://yourmist.streamlit.app/

Pro Tip: That site was unresponsive when I tried it last week, but that gave me a chance to test the capabilities of my favorite AI chatbots. Yara published the source code for her app on GitHub, so I simply entered the GitHub URL into Grok 3 and said “run this app” (by the way, the same technique works on Claude and ChatGPT). Immediately I had the quiz running on Grok! As a bonus, I was able to ask my chatbot additional questions about the quiz itself, as well as the study behind it.

My Results: I took the MIST-16, scoring 12/16 (36th percentile in the US). Pretty awful!

Does that make me a gullible fool?

Actually, no. In fact, the more I looked into this study (and its quiz), the more skeptical I became of its entire premise.

The quiz asks me to distinguish between “Real” and “Fake” headlines like these:

“The Government Is Knowingly Spreading Disease Through the Airwaves and Food Supply”

“Morocco’s King Appoints Committee Chief to Fight Poverty and Inequality”

That first one is an easy “Fake”, which I answered correctly because of its sensational wording and dubious mechanistic assumptions (how exactly would you spread disease “through the airwaves”?).

But the quiz says I was wrong to label the second headline as fake: apparently it’s an actual headline from the New York Times. I was skeptical because how could anyone know the King made the appointment “to fight poverty and inequality” rather than, say, to give his brother-in-law a high-paying government job?

In other words, I was too skeptical. My errors came from guessing “Fake” when a headline was “Real”.
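A single total hides which direction your mistakes went. As an illustration, here is a minimal Python sketch that splits a real-vs-fake quiz score into errors of excess skepticism and errors of excess trust. It is a hypothetical scorer, not code from the MIST paper or Yara’s app; the `Item` format is an assumption, and the example answers assume a 16-item quiz with 8 “Real” and 8 “Fake” headlines.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """One quiz item (hypothetical format, not the MIST app's data model)."""
    truth: str   # label assigned by the quiz authors: "Real" or "Fake"
    answer: str  # the respondent's guess: "Real" or "Fake"


def score_quiz(items: list[Item]) -> dict[str, int]:
    """Split a real-vs-fake quiz score by item type and by error direction."""
    real = [i for i in items if i.truth == "Real"]
    fake = [i for i in items if i.truth == "Fake"]
    real_correct = sum(i.answer == "Real" for i in real)
    fake_correct = sum(i.answer == "Fake" for i in fake)
    return {
        "total": real_correct + fake_correct,
        "real_detected": real_correct,
        "fake_detected": fake_correct,
        # Too skeptical: real headlines labeled "Fake"
        "real_called_fake": len(real) - real_correct,
        # Too trusting: fake headlines labeled "Real"
        "fake_called_real": len(fake) - fake_correct,
    }


# Hypothetical 16-item answer pattern: 8 real and 8 fake headlines,
# with four real headlines mistakenly labeled "Fake".
answers = (
    [Item("Real", "Fake")] * 4
    + [Item("Real", "Real")] * 4
    + [Item("Fake", "Fake")] * 8
)
print(score_quiz(answers))
# {'total': 12, 'real_detected': 4, 'fake_detected': 8, 'real_called_fake': 4, 'fake_called_real': 0}
```

Under the same 8/8 assumption, someone who answers “Fake” to every headline would still score 8/16 with a perfect fake-detection count, which is why the two error types say more than the total alone.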

It turns out that the quiz, and the whole paper and research behind it, relies on a crucial assumption: a “Real” headline is one from what the study authors decided was an “unbiased source,” as rated by the organization Media Bias/Fact Check (MBFC). Anything else is “Fake”, even if the claim itself is true.

A 2020 Gallup poll cited in the paper notes only 16% of Americans trust news organizations “a great deal,” yet the MIST assumes participants will accept mainstream headlines as true. This disconnect can mislabel critical thinkers as misinformation-susceptible, as my 12/16 score suggests.

That’s fine. I have no particular reason to question the good people at MBFC, but I care more about truth than bias. Wouldn’t it be better to have a rating service that evaluates whether the headline is, you know, true?

Unfortunately good fact-checking is much harder than it looks. Even in situations where there is no political bias, and where the researchers involved would seem to have no particular stake in the outcome, people can come to different conclusions. The best recent example of this:

An international team of biologists recruited 174 analyst teams to answer prespecified research questions in ecology and evolutionary biology. In other words, volunteer teams were given identical data and asked to write a science- and fact-based conclusion. Their final results, published in February 2025, showed wide variation in conclusions despite the identical datasets. The answers weren’t just off by a little: half the teams said “yes,” half said “no,” and a third of the teams claimed their conclusions were statistically significant.

If this is true for a normal scientific study, imagine how difficult it would be for a journalist writing about a typical everyday matter in the news.

Bottom line: stay skeptical, no matter how you scored on the MIST.

Using Consensus to Evaluate Scientific Studies

ChatGPT, Claude, Perplexity, and the other major LLM chatbots suffer from one fatal flaw if you’re trying to study published scientific information: they only work with open-access, publicly available sources. Since the most prestigious and highly respected journals (like Science and Nature) keep their best content behind expensive paywalls, your garden-variety LLM won’t know about it.

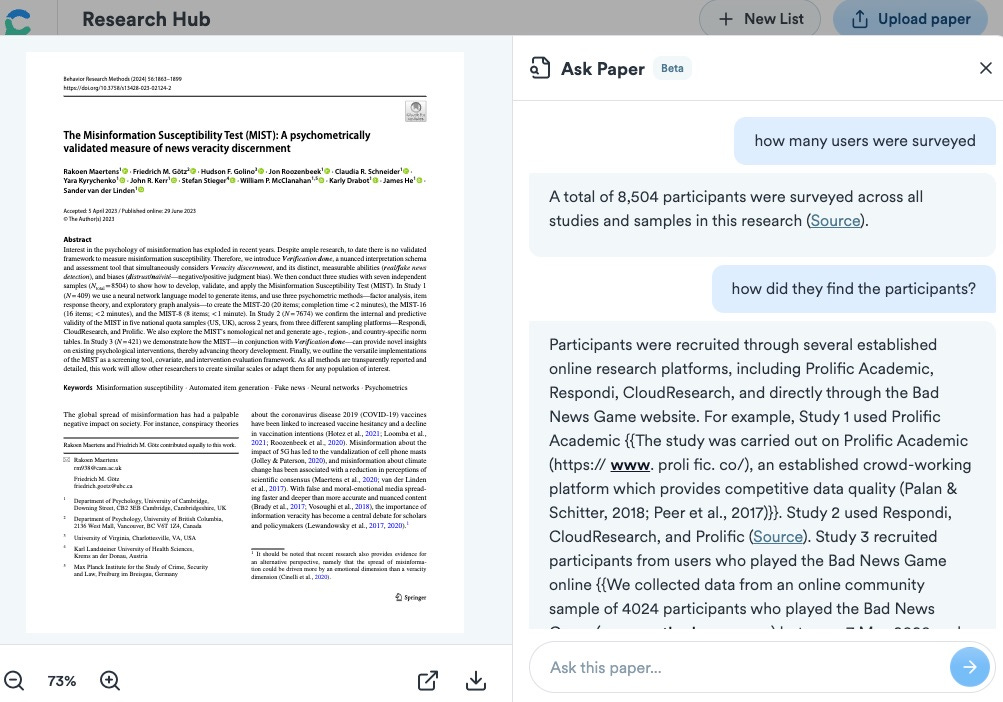

Consensus.app, on the other hand, has licensed content from most of the top journals, and it lets you filter academic studies by specific criteria, such as whether a study used a randomized controlled trial or had a minimum number of participants.

I pay $12/month for the Pro version, which gives me access to the “Ask Paper” feature, where you ask the LLM about specific details of a particular study. I used it to find out more about the MIST paper above.

I’ve found Consensus useful for those paywalled studies, though as with all LLMs, you need to double-check the results. My experience: if the paper is open access via a URL, or if you have a PDF copy of the paper, the mainstream chatbots are as good or better. But if you’re filtering a bunch of (paywalled) results, Consensus is the way to go.

Personal Science Readings in Bias and Misinformation

LLMs have given personal scientists the ability to do high-quality literature reviews that rival the best work you’ll find from professionals, so PS Week readers who want to go deeper on the topics we raised this week should do the research themselves:

Here’s a 20-page Deep Research report by Gemini 2.5, “An Analysis of Inaccurate Health Reporting in The New York Times and The Washington Post,” and a similar thread from Perplexity, “When Trusted News Sources Get Health News Wrong.” Both include more sourced examples than I can list here, such as “The Great Egg Controversy,” when reputable organizations agreed that eating eggs was bad for you, plus similar cases involving vitamin E, monkeypox, alcohol, and more.

And your regular reminder to be wary of Wikipedia: just look at the outsized influence one person has on choosing "reliable sources", most of which are left-leaning outlets he personally likes. Wikipedia's perennial sources list rates the Times of India, the most influential newspaper in India, as "yellow", less reliable than the openly biased Mother Jones (green). Similarly, MSNBC is green but Fox News is yellow.

Maybe some of the bias is so ingrained in our culture that we can’t escape it. A paper from the Harvard lab of Joseph Henrich, “Which Humans?”, suggests that LLMs’ responses depend heavily on the culture in which they were trained:

We show that LLMs’ responses to psychological measures are an outlier compared with large-scale cross-cultural data, and that their performance on cognitive psychological tasks most resembles that of people from Western, Educated, Industrialized, Rich, and Democratic (WEIRD) societies but declines rapidly as we move away from these populations (r = -.70)

More Useful Links for Personal Scientists

Don’t miss Bob Troia on the Smarter Not Harder podcast, a one-hour conversation about all things quantified self, including why he’s not a fan of biological age tests (what does the number even mean?), how a brain scan showed that caffeine doesn’t make him jittery while methylene blue had a big effect on his ability to focus, and his new supplement-tracking app, SuppTrack. (H/T: Gary Wolf)

Check out Cosimo Research, “A scientific research service for curiosity-driven investigation.” They sponsor informal scientific investigations; in other words, personal science. They’re now recruiting subjects to help evaluate the benefits of mouth taping.

Bay Area residents, please join us May 5-8 at the Global Synthetic Biology Conference. Our friend Jocelynn Pearl is leading a session on DeSci and the future of biology and biohacking. I’ll be there the whole week, so let me know if you have time to meet.

About Personal Science

Personal science involves applying scientific thinking to everyday life decisions. We emphasize data collection, skeptical analysis, and drawing our own conclusions rather than blindly trusting conventional wisdom or authorities.

If you have thoughts about misinformation detection or have tried the MIST yourself, let us know.

For most people, "fake" is now simply anything that doesn't fit their worldview. Which can be a problem if that worldview isn't well aligned with reality 🙃

Regarding the "test": If a headline says "China reports 5.4% GDP growth", that's not fake news if they did in fact report that number. Whether the number is accurate or not is another question...